Using mathematics to generate choreographic variations

Project Description

In a 1996 paper (Chaos 6:95-107), Diana Dabby (diana.dabby@olin.edu) describes a technique that uses a chaotic mapping to generate variations on a musical piece. The basic idea is to map the pitch sequence onto a chaotic trajectory; this establishes a symbolic dynamics that links the attractor geometry and the structure of the musical piece. One then generates a new chaotic trajectory and inverts the mapping to generate a new pitch sequence. Sensitive dependence on initial conditions guarantees that the variation is different from the original; the attractor structure and the symbolic dynamics guarantee that the two resemble one another in both esthetic and mathematical senses.
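
The following Python sketch illustrates a Dabby-style pitch mapping as we understand it; it is not Dabby's code. It assumes the Lorenz equations with the standard parameters (sigma = 10, rho = 28, beta = 8/3), fourth-order Runge-Kutta integration, and one sampled x-value per note. The function names, step size, sampling stride, starting point, and the fallback rule for values above the whole reference set are illustrative choices. Each pitch is paired with an x-value of a reference trajectory; a second trajectory, started from a slightly perturbed initial condition, is read back through that pairing by choosing, for each new x-value, the pitch whose reference x-value is the smallest one greater than or equal to it.

```python
def lorenz_step(s, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz system by one fourth-order Runge-Kutta step."""
    def f(p):
        x, y, z = p
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shift(p, k, h):
        return tuple(pi + h * ki for pi, ki in zip(p, k))

    k1 = f(s)
    k2 = f(shift(s, k1, dt / 2))
    k3 = f(shift(s, k2, dt / 2))
    k4 = f(shift(s, k3, dt))
    return tuple(si + dt / 6 * (a + 2 * b + 2 * c + d)
                 for si, a, b, c, d in zip(s, k1, k2, k3, k4))


def x_values(start, n, stride=10):
    """Sample the x-coordinate once every `stride` integration steps."""
    xs, s = [], start
    for _ in range(n):
        for _ in range(stride):
            s = lorenz_step(s)
        xs.append(s[0])
    return xs


def dabby_style_variation(pitches, perturbation=1e-3, transient=2000):
    """Pair pitches with a reference trajectory, then read a perturbed
    trajectory back through that pairing (a sketch, not Dabby's code)."""
    s = (1.0, 1.0, 1.0)
    for _ in range(transient):          # let the state settle onto the attractor
        s = lorenz_step(s)
    ref = x_values(s, len(pitches))     # x_i is paired with pitch p_i
    new = x_values((s[0] + perturbation, s[1], s[2]), len(pitches))
    out = []
    for xn in new:
        # smallest reference x_i that is >= the new x value
        above = [i for i, xr in enumerate(ref) if xr >= xn]
        if above:
            i = min(above, key=lambda k: ref[k])
        else:
            # illustrative fallback: xn exceeds every x_i, so use the largest
            i = max(range(len(ref)), key=lambda k: ref[k])
        out.append(pitches[i])
    return out
```

With `perturbation=0.0` the two trajectories coincide and the sketch returns the original sequence; larger perturbations let the trajectories separate sooner, so the variation tends to depart from the original earlier.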

The program Chaographer implements a similar scheme for dance. The core of the chaotic mapping technique is the same, but many of the issues and tactics, together with much of the mathematics, are very different. The symbol set is one obvious distinction. There is a simple, well-established notational scheme for music, but body positions are much harder to represent; we use representational techniques from rigid-body mechanics to solve this problem. The mathematics of the mapping is also very different: Dabby uses a simple metric on a one-dimensional projection of the trajectory to define cells, whereas we work with a full, formal symbolic dynamics derived using computational geometry techniques.

The amount of human intervention required is also different. In Dabby’s scheme, both input and output are pitch sequences, and a human expert translates these sequences to and from sound. Our variation generator takes an animation as input and generates an animation as output.
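
To contrast with a one-dimensional projection, here is a minimal Python sketch that assigns cells using the full three-dimensional state. Each posture labels one point on a reference Lorenz trajectory, and every point of a perturbed trajectory is mapped to the posture of its nearest reference point, which amounts to partitioning state space into the Voronoi cells of the reference points. This nearest-neighbor partition is an assumption made for illustration; it is a much simpler stand-in for the formal symbolic dynamics Chaographer derives, and the postures here are opaque labels rather than rigid-body joint descriptions. All names and parameters are hypothetical.

```python
import math


def lorenz_step(s, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz system by one fourth-order Runge-Kutta step."""
    def f(p):
        x, y, z = p
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shift(p, k, h):
        return tuple(pi + h * ki for pi, ki in zip(p, k))

    k1 = f(s)
    k2 = f(shift(s, k1, dt / 2))
    k3 = f(shift(s, k2, dt / 2))
    k4 = f(shift(s, k3, dt))
    return tuple(si + dt / 6 * (a + 2 * b + 2 * c + d)
                 for si, a, b, c, d in zip(s, k1, k2, k3, k4))


def sample_states(start, n, stride=10):
    """Return n full 3-D states, one every `stride` integration steps."""
    pts, s = [], start
    for _ in range(n):
        for _ in range(stride):
            s = lorenz_step(s)
        pts.append(s)
    return pts


def nearest_cell_variation(postures, perturbation=1e-3, transient=2000):
    """Map each state of a perturbed trajectory to the posture whose
    reference state is nearest, i.e. whose Voronoi cell contains it."""
    s = (1.0, 1.0, 1.0)
    for _ in range(transient):  # settle onto the attractor
        s = lorenz_step(s)
    ref = sample_states(s, len(postures))  # ref[i] labels postures[i]
    new = sample_states((s[0] + perturbation, s[1], s[2]), len(postures))
    out = []
    for q in new:
        i = min(range(len(ref)), key=lambda k: math.dist(q, ref[k]))
        out.append(postures[i])
    return out
```

Unlike a threshold on a single coordinate, the nearest-neighbor rule uses the whole state, so two reference points with similar x-values but different positions on the attractor stay in separate cells.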

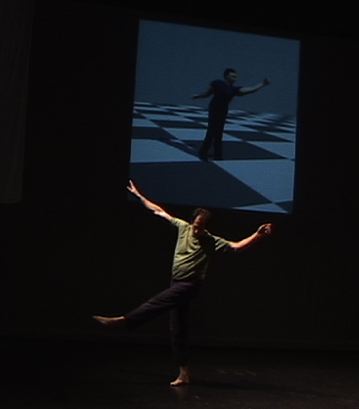

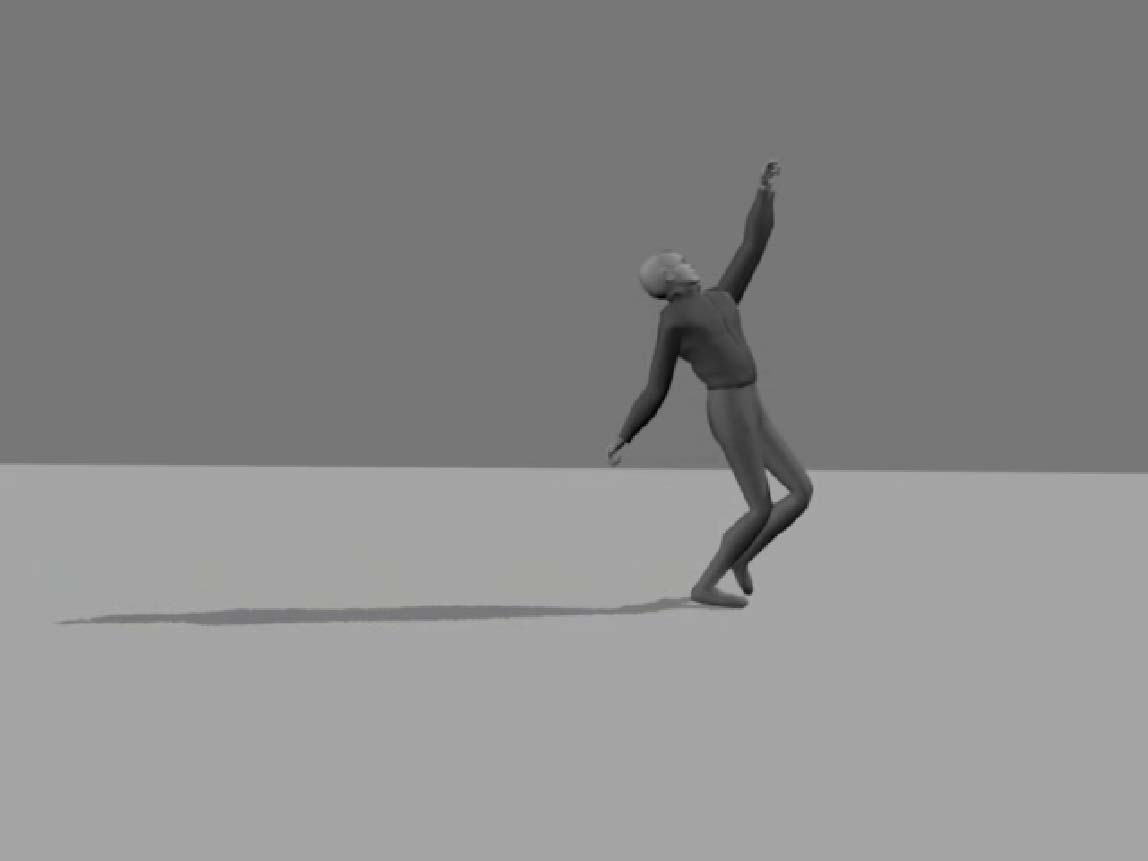

An original performance piece using six Chaographer variations and a human dancer, entitled “Con/cantation: chaotic variations,” premiered in Boston in April 2007 and has since appeared in Boulder and Santa Fe. A still shot appears at the top of this page.

An original dance:

A chaotic variation on that piece:

Musical instruments can play arbitrary pitch sequences, but kinesiology and dance style impose a variety of constraints on consecutive body postures. To smooth any abrupt transitions introduced by the chaotic mapping, we have developed a class of corpus-based interpolation schemes that capture and enforce the dynamics of a dance genre.

The task of automatically generating stylistically consonant sequences between arbitrary body postures is quite difficult. We use a corpus of human movement to build a set of 44 weighted, directed graphs, one for each joint in the human body. Each vertex is a joint position, and each edge represents a transition between the two vertices that it links. Edges are weighted according to how often the corresponding transitions are observed in the corpus. Interpolating between two body positions is equivalent to finding the shortest ensemble-path through this set of graphs between the states that correspond to those positions. We use A* search to implement this, along with a special scoring function that enforces inter-joint coordination constraints (e.g., the position of the pelvis influences what the hips are allowed to do). These machine learning techniques are implemented in a program called MotionMind.
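
A single-joint version of this search can be sketched in Python as follows. This is an illustrative sketch, not MotionMind's code. It assumes joint positions have been quantized to integer states, turns observed transition counts into edge costs of -log P(next | current) so that frequently observed transitions are cheap, and runs A* with a distance-based heuristic that never overestimates the remaining cost. The ensemble search across all 44 joints and the inter-joint coordination scoring are omitted; the helper names and the cost function are hypothetical.

```python
import heapq
import math
from collections import defaultdict


def build_joint_graph(sequences):
    """Count transitions a -> b for one joint across a corpus of quantized
    joint-angle sequences; edge cost is -log P(b | a, the joint moves)."""
    counts = defaultdict(lambda: defaultdict(int))
    for seq in sequences:
        for a, b in zip(seq, seq[1:]):
            if a != b:  # ignore frames where this joint stays still
                counts[a][b] += 1
    graph = {}
    for a, outs in counts.items():
        total = sum(outs.values())
        graph[a] = {b: -math.log(c / total) for b, c in outs.items()}
    return graph


def astar(graph, start, goal):
    """Lowest-cost path from start to goal, or None if goal is unreachable."""
    edges = [(a, b, c) for a, outs in graph.items() for b, c in outs.items()]
    if not edges:
        return [start] if start == goal else None
    max_step = max(abs(b - a) for a, b, _ in edges)
    min_cost = min(c for _, _, c in edges)

    def h(s):
        # any path needs at least |s - goal| / max_step edges, each >= min_cost
        return abs(s - goal) / max_step * min_cost

    frontier = [(h(start), 0.0, start)]
    best = {start: 0.0}
    parent = {start: None}
    while frontier:
        _, g, s = heapq.heappop(frontier)
        if g > best[s]:
            continue  # stale queue entry
        if s == goal:
            path = []
            while s is not None:
                path.append(s)
                s = parent[s]
            return path[::-1]
        for t, c in graph.get(s, {}).items():
            g2 = g + c
            if g2 < best.get(t, math.inf):
                best[t] = g2
                parent[t] = s
                heapq.heappush(frontier, (g2 + h(t), g2, t))
    return None
```

For example, with the toy corpus `[[0, 1, 2, 3], [0, 1, 2, 3], [0, 2, 3], [3, 2, 1, 0]]`, `astar(build_joint_graph(corpus), 0, 3)` returns `[0, 1, 2, 3]`: the frequently observed route costs log 3 (about 1.10), while the rarer shortcut through 0, 2, 3 costs log 4 (about 1.39).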

Chaographer and MotionMind draw on techniques from nonlinear dynamics, numerical analysis, graph theory, statistics, rigid-body mechanics, and machine learning, as well as graphics and animation.

Animated Movement Sequences

If you're running Mac OS X and using the Safari browser, you can skip the OS- and tool-specific "how to" information in the following paragraph, because Apple did it right and that combination just works.

Some of these clips are in AVI format and some are in MPEG format. Your browser should understand both of these formats. On Macs and PCs, you can download them and play them using Sparkle or QuickTime. To play the MPEG files using QuickTime, you'll need the MPEG extension; see the QuickTime site to find out how to obtain the player and/or the MPEG extension if your browser doesn't handle it automatically. QuickTime is only available for Windows and Mac OS; if you're on a Unix box, you'll need an MPEG viewer like mpeg_view. Click here for a page that has links to a variety of MPEG viewers for different architectures and operating systems.

Note that the performance of all movie-playing software degrades ungracefully if you're low on memory or if you have many other applications running.

The original sequences shown here were generated with the aid of a commercial human animation package called Life Forms. (Another good commercial figure animation package is Poser.)

Chaotic variations generated by Chaographer

- Some original pieces:
  - macarena
  - ballet adagio composed by Nadia Rojas-Adame
  - kenpo karate kata
  - medley: three ballet jumps, one kenpo kata, and twice through the macarena
- Variations generated by Chaographer using the Lorenz equations:
  - chaotic macarena variation
  - chaotic variation of the ballet adagio: note the abrupt transitions...ouch!
  - chaotic kenpo karate kata variation
  - chaotic variation of the three-piece medley
- Variations generated by Chaographer using the Rossler equations:
- A randomly shuffled version of the medley, for contrast. Note how different this is from the two chaotic variations on the medley that appear above.

Interpolated "tweening" sequences generated by MotionMind:

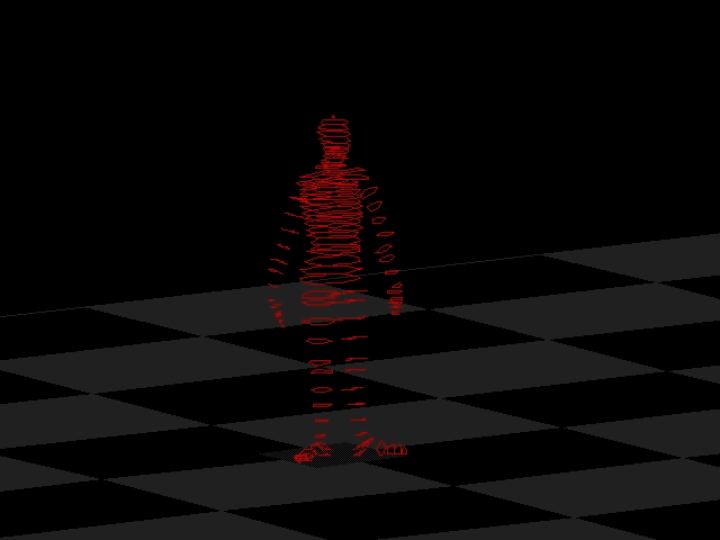

MotionMind finds stylistically consonant interpolation sequences between two positions. If it is given a corpus of ballet, for instance, and the following two poses:

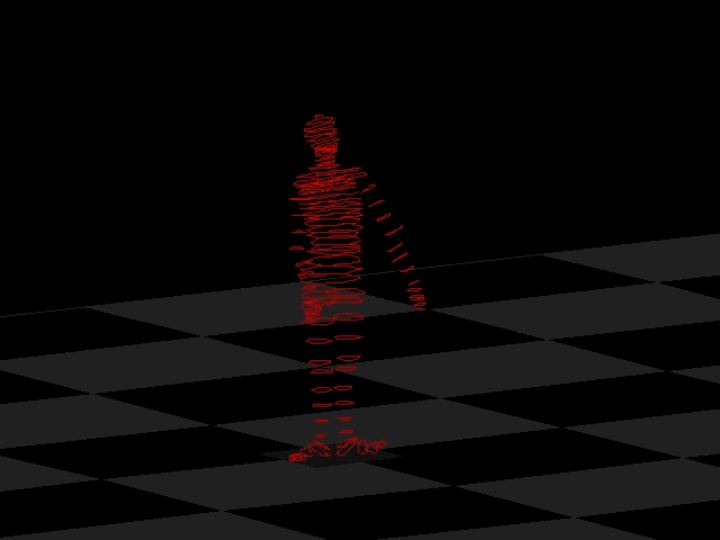


(These images show a red figure on a black background, and they don't show up well on some monitors. If you have problems making out the figure, please try changing your brightness and contrast, or turning out the room lights.)

Some out-takes that bring out interesting effects:

If the corpus that MotionMind uses is sparse, it may have trouble finding a path between a given pair of positions. This manifests itself in an interesting way: sequences that are stylistically consonant but very long. Presented with these two positions, for instance:


Coordination between joints is also an important constraint in human movement: one that, when violated, produces visibly awkward results. Click here to see an example of what happens if MotionMind is applied to a ballet corpus, but with its inter-joint coordination search heuristics disabled so it does not enforce coordination.
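
One way to express an inter-joint coordination term is sketched below in Python. The pelvis/hip pairing echoes the example given earlier on this page, but the Laplace-smoothed co-occurrence penalty, the `weight` and `n_combos` parameters, and all function names are assumptions for illustration, not MotionMind's actual scoring function. Joint combinations that rarely or never appear together in the corpus receive a large penalty; setting `weight` to zero removes the penalty, which corresponds to searching without coordination enforcement.

```python
import math
from collections import Counter


def coordination_table(frames):
    """Count how often each (pelvis_state, hip_state) pair occurs in corpus frames."""
    return Counter(frames)


def coordination_penalty(table, pelvis, hip, n_combos, alpha=1.0):
    """Negative log of the Laplace-smoothed probability of this pelvis/hip
    combination, smoothing over n_combos possible combinations."""
    total = sum(table.values())
    p = (table[(pelvis, hip)] + alpha) / (total + alpha * n_combos)
    return -math.log(p)


def step_cost(joint_costs, table, pelvis, hip, n_combos, weight=1.0):
    """Cost of one ensemble step: the per-joint transition costs plus a
    weighted coordination penalty; weight=0.0 turns coordination off."""
    return sum(joint_costs) + weight * coordination_penalty(table, pelvis, hip, n_combos)
```

With a corpus of eight `(0, 0)` frames and two `(1, 1)` frames and four possible combinations, the common pairing costs log(14/9), the rarer one log(14/3), and the never-seen `(0, 1)` pairing the most, log 14.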

In order to build finite representations of these movement sequences, we had to discretize the angles of the joints. This is analogous to "snapping" objects to a grid in computer drawing applications, but it has some surprising effects when used to quantize human motion. The animation here shows a quantized version of a ballet adagio in blue, with the original dance superimposed upon it in red. Interestingly enough, side-by-side comparisons of the individual frames do not reveal such striking differences. The human visual perception system appears to be very sensitive to small variations in joint angles in a moving figure: small changes seem to violate the "motif" of the motion.

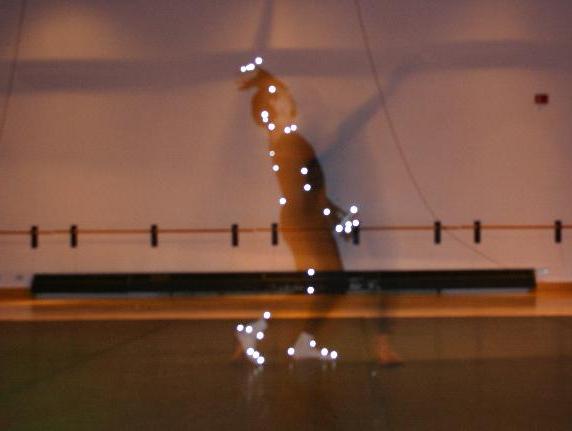

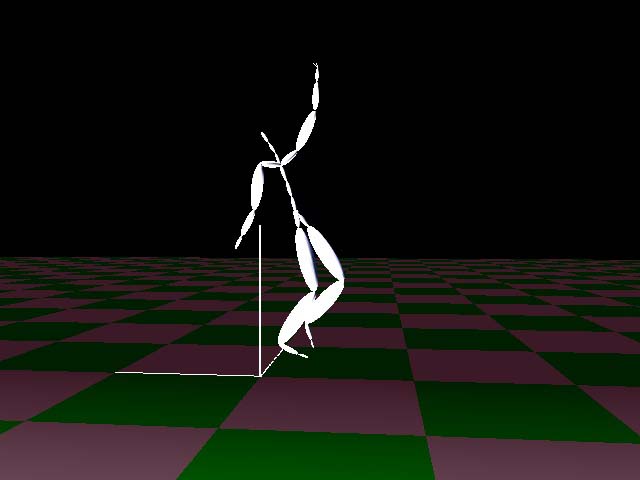
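
The snapping step itself is simple. The Python sketch below assumes a uniform grid of `step_deg` degrees for a joint angle (the grid size actually used is not stated here, and the function names are hypothetical). It shows how a smooth ramp turns into a staircase: each quantized frame is within half a step of the original, but motion that was spread evenly across frames is concentrated into single jumps, which may help explain why the quantized animation looks so different from the original even when individual frames do not.

```python
def quantize_angle(theta_deg, step_deg):
    """Snap a joint angle (degrees) to the nearest multiple of step_deg,
    wrapped into [0, 360)."""
    return (round(theta_deg / step_deg) * step_deg) % 360


def quantize_sequence(angles, step_deg):
    """Quantize every frame of one joint's angle trajectory."""
    return [quantize_angle(a, step_deg) for a in angles]


def frame_deltas(seq):
    """Per-frame change in angle (ignores wrap-around at 360 degrees)."""
    return [b - a for a, b in zip(seq, seq[1:])]
```

For example, the ramp `[0, 2, 4, 6, 8, 10]` quantized with a 10-degree step becomes `[0, 0, 0, 10, 10, 10]`: the per-frame changes go from a steady `[2, 2, 2, 2, 2]` to `[0, 0, 10, 0, 0]`.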

How to obtain better corpora: Motion capture

Though its results are quite satisfying, animating a sequence in Life Forms is a slow and painful process. We're currently working with Jessica Hodgins and the CMU motion-capture lab to obtain longer sequences and build richer corpora. This process involves sticking reflective balls to a dancer's joints, surrounding him or her with 12 cameras that take 120 snapshots per second, and then reconstructing a 3D model of the body position in each snapshot.

People

- Liz Bradley, Professor of Computer Science.

- Josh Stuart, the originator of these ideas and the author of the corresponding programs, was an undergraduate research assistant with our group from 1/97 to 9/98 and is now on the faculty at UC Santa Cruz. He had a lot of help from Apollo Hogan, Stephen Schroeder, Diana Dabby, and a bunch of other people.

- David Capps, who is shown above with reflectors taped to his joints, is the director of the Dance division of the Department of Theater and Dance at the University of Colorado. He is the dance brains behind this project.

Papers and stuff

- E. Bradley, D. Capps, J. Luftig, and J. Stuart, "Towards Stylistic Consonance in Human Movement Synthesis," Open AI Journal 4:1-19 (2010). Extended version available as Department of Computer Science Technical Report CU-CS-1029-07.

- E. Bradley and J. Stuart, "Using Chaos to Generate Variations on Movement Sequences," Chaos, 8:800-807 (1998)

- J. Stuart and E. Bradley, "Learning the Grammar of Dance," Proceedings of the ICML (International Conference on Machine Learning), Madison WI; July 1998

- E. Bradley and J. Stuart, "Using Chaos to Generate Choreographic Variations," Proceedings of the Experimental Chaos Conference, Boca Raton FL; August 1997 (a preliminary version of the 1998 Chaos paper listed above)

- E. Bradley, D. Capps, and A. Rubin, "Can Computers Learn to Dance?," International Conference on Dance and Technology, Tempe, AZ; February 1999 (written for the dance world)

- E. Bradley and J. Stuart, "Optimization and Human Movement," Newsletter of the SIAM Activity Group on Optimization 12(1) (2001) (a short version written for the numerical community)

- An original performance piece entitled Con/cantation, which was based on Chaographer's variations of an original dance composed by David Capps, premiered in Boston in April 2007.

Links

- Jessica Hodgins's group at CMU is working on a physics-based approach to generating animation sequences (which is much harder than what we're doing, as well as really neat).

- Demetri Terzopoulos's group at Toronto is working on a neural-net based approach to generating animation sequences.

- The Dance Notation Bureau and a nice tutorial about Labanotation, a written form of dance notation.

- UP, a collaboration between Pilobolus and MIT CSAIL.

Support

- The National Science Foundation (NYI #CCR-9357740)

- A Packard Fellowship in Science and Engineering from the David and Lucile Packard Foundation

- A Dean's Small Grant from the College of Engineering and Applied Sciences at the University of Colorado

- A Radcliffe Fellowship from the Radcliffe Institute for Advanced Study, one of the truly wonderful places in the world

- Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of these organizations.